My response can be seen online here. Clicking on the webpage changes the image on the screen and generates a new line of text.

Theme :

When thinking about the theme of Play and Self, my first thought was about self-image. I considered making something with portraits, using an AI text-to-image application to create images that *could* be of me by feeding it increasingly detailed text descriptions as inputs. The results didn’t feel very interesting or playful, however, so I spent more time thinking about the theme. I started thinking about the way people present themselves online versus offline, which led me to the personal data that people create through their use of social media. I decided to use the data I could pull from my personal Twitter account, which I’ve had since I was a teenager and have used extensively since my early 20s, and to generate new text from it.

Method :

I requested an archive of my personal data from Twitter. Part of this archive was a JSON file containing all 60,000 tweets I’ve made, along with their associated metadata. I only wanted the full_text field of each tweet, so I followed a tutorial for importing the JSON data into Microsoft Excel and filtering out the data I didn’t need. I also removed every tweet that was a retweet, a direct reply to another person, or contained a hyperlink. This cut my dataset down to 17,000 lines of tweets, which I saved as a text file.
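The filtering above was done in Excel, but the same rules can be written as a short script. The following is a minimal Python sketch, not the Excel workflow described above. It assumes each record is a `tweet` object carrying `full_text` and, for replies, `in_reply_to_status_id`; that retweets start with `RT @`; and that the JSON array may be preceded by a JavaScript assignment. Check all of these against your own archive. The function names are hypothetical.

```python
import html
import json
import re

# Assumption: records look like {"tweet": {"full_text": ..., "in_reply_to_status_id": ...}}.
# Verify these field names against your own export before relying on them.
URL_PATTERN = re.compile(r"https?://\S+")


def load_archive(raw: str) -> list:
    """Parse the archive, skipping any JavaScript prefix before the JSON array."""
    return json.loads(raw[raw.index("["):])


def keep_tweet(tweet: dict) -> bool:
    """Apply the three filters: no retweets, no replies, no hyperlinks."""
    text = tweet.get("full_text", "")
    if text.startswith("RT @"):             # assumed marker for retweets
        return False
    if tweet.get("in_reply_to_status_id"):  # assumed reply marker (also catches self-replies in threads)
        return False
    return not URL_PATTERN.search(text)     # any link, including t.co short links


def extract_corpus(records: list) -> list:
    """Return one cleaned line of text per kept tweet."""
    lines = []
    for record in records:
        tweet = record.get("tweet", record)  # unwrap nested records if present
        if keep_tweet(tweet):
            # Unescape entities like &amp; and collapse newlines so each tweet is one line
            text = " ".join(html.unescape(tweet["full_text"]).split())
            if text:
                lines.append(text)
    return lines


def write_corpus(lines: list, path: str) -> None:
    """Write one tweet per line, the layout used for the source text file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
```

Calling `write_corpus(extract_corpus(load_archive(raw)), "tweets.txt")` on the archive's contents would produce a one-tweet-per-line text file like the one used as the sketch's source text.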

I then researched RiTa.js, a JavaScript library for language processing and text generation that I hadn’t used before. I found a code example that used both RiTa.js and p5.js (a coding framework I’m more familiar with) to build Markov chains from source texts on mouse click and display the result as text on the screen. I swapped in the tweets file as the source text, then adjusted some of the Markov chain generation parameters until I was getting lines of text I was happy with: sentences that weren’t entirely nonsensical, but also weren’t whole tweets reproduced verbatim.
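RiTa.js does the Markov chain generation in the sketch, so the code below is not its API. It is a from-scratch, word-level Markov chain in Python that illustrates the trade-off described above. A higher `order` (how many previous words choose the next one) makes output more coherent but also more likely to copy whole tweets, so a hypothetical `max_run` check rejects any candidate that reproduces a source tweet or shares more than `max_run` consecutive words with one. Whitespace tokenisation and the default values are assumptions.

```python
import random
from collections import defaultdict
from typing import Optional

START, END = "<s>", "</s>"  # padding tokens marking the start and end of a tweet


def build_model(lines: list, order: int = 2) -> dict:
    """Map each run of `order` words to every word that followed it in the corpus."""
    model = defaultdict(list)
    for line in lines:
        words = [START] * order + line.split() + [END]
        for i in range(len(words) - order):
            model[tuple(words[i:i + order])].append(words[i + order])
    return dict(model)


def generate(model: dict, order: int = 2, rng: Optional[random.Random] = None,
             max_words: int = 40) -> str:
    """Random walk through the chain from the start state until END or max_words."""
    rng = rng or random.Random()
    state, out = (START,) * order, []
    while len(out) < max_words and state in model:
        word = rng.choice(model[state])  # repeated followers make common words likelier
        if word == END:
            break
        out.append(word)
        state = state[1:] + (word,)
    return " ".join(out)


def ngrams(words: list, n: int) -> set:
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def make_copy_checker(source_lines: list, max_run: int = 6):
    """Flag text that equals a source tweet or shares > max_run consecutive words with one."""
    exact = {" ".join(line.split()) for line in source_lines}
    runs = set()
    for line in source_lines:
        runs |= ngrams(line.split(), max_run + 1)

    def copies_source(text: str) -> bool:
        words = text.split()
        return " ".join(words) in exact or bool(ngrams(words, max_run + 1) & runs)

    return copies_source


def generate_novel(model: dict, copies_source, order: int = 2, tries: int = 50,
                   rng: Optional[random.Random] = None) -> Optional[str]:
    """Resample until a line is non-empty and not a near-verbatim copy; None if all tries fail."""
    rng = rng or random.Random()
    for _ in range(tries):
        candidate = generate(model, order, rng)
        if candidate and not copies_source(candidate):
            return candidate
    return None
```

On a very small corpus almost every walk retraces a source line, so `generate_novel` may return `None`; the larger the corpus, the more room there is to raise `order` before copying becomes a problem.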

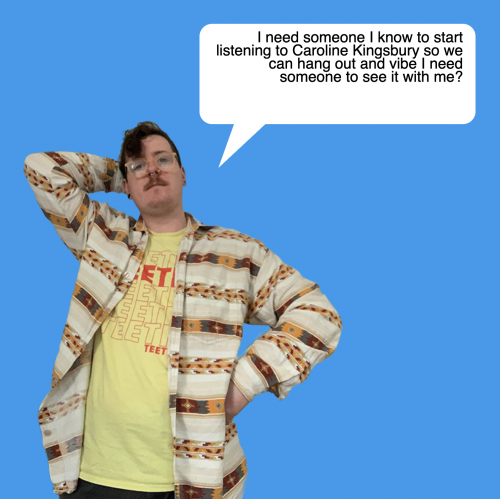

With the text generation working, I started to think about how to present it. Because the source text was all things I’ve said online, I thought it would be fun to have myself “speak” the text. I changed the background of the sketch to Twitter blue and styled the text so it would look like it was appearing in a speech bubble. I then looked at some pose references for character sprites in visual novels and took a series of photos of myself recreating the poses. I removed the background from these photos and added the cutout figures to the p5.js sketch, so that each time a new line of text is generated, a different pose is displayed on the screen.
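The click behaviour (a new line of text paired with a different pose) could work along these lines. This Python sketch assumes the poses are numbered `0` to `pose_count - 1` and that "different" means never repeating the pose already on screen; the original p5.js sketch may simply cycle through them. `next_pose` and `on_click` are hypothetical names.

```python
import random
from typing import Optional


def next_pose(current: Optional[int], pose_count: int, rng: random.Random) -> int:
    """Pick a random pose index in range(pose_count) that differs from `current`."""
    if current is None or pose_count < 2:
        return rng.randrange(pose_count)
    # Draw uniformly from the pose_count - 1 other poses, then shift past the current one
    choice = rng.randrange(pose_count - 1)
    return choice + 1 if choice >= current else choice


def on_click(state: dict, generate_line, pose_count: int, rng: random.Random) -> dict:
    """Hypothetical click handler: returns fresh state with a new line and a new pose."""
    return {"text": generate_line(), "pose": next_pose(state.get("pose"), pose_count, rng)}
```

Drawing from the other poses and shifting past the current index avoids a retry loop while keeping every other pose equally likely.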

(EDIT 20/10 - When trying to view the website in preparation for the Folio 2 submission, I noticed that it wasn't loading. I think an update to either the p5.js or RiTa.js libraries, or to the hosting settings at neocities.org, has broken the sketch in some way. I was able to get it working again by reducing the tweets.txt file from 17,000 lines to 2,000 and rehosting the site on glitch.me. The text generation isn't as varied now, but it still gets the idea across.)

Context :

My response uses Markov chains to algorithmically generate new combinations of text from the corpus of my Twitter archive, but the practice of using existing text as a base for new work predates computers. Earlier related practices include the cut-up technique used by Dadaist writers and poets such as Tristan Tzara, which produces ‘texts derived from existing writing or printed sheets that are then dissembled or cut up at random and reassembled to create a new text.’ (Flanagan, 2009). Another is the diastic (or spelling-through) technique, which Jackson Mac Low pioneered as a method for composing poetry from a source text and a seed phrase (Hartman, 1996).
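Both earlier techniques are simple enough to express as procedures. In the Python sketch below, `cut_up` cuts a text at random points and shuffles the pieces, and `diastic` spells a seed phrase through a source text. The diastic rule used here (the letter at position i of a seed word must also sit at position i of the chosen source word, with positions restarting for each seed word) is an assumption based on common descriptions of Mac Low's method, not a reconstruction of his or Hartman's exact procedure. The `max_fragment` limit is hypothetical.

```python
import random
from typing import List, Optional


def cut_up(text: str, max_fragment: int = 4, rng: Optional[random.Random] = None) -> str:
    """Cut the text into random-length word fragments, shuffle them, and reassemble."""
    rng = rng or random.Random()
    words, fragments, i = text.split(), [], 0
    while i < len(words):
        n = rng.randint(1, max_fragment)  # random cut point
        fragments.append(words[i:i + n])
        i += n
    rng.shuffle(fragments)
    return " ".join(word for fragment in fragments for word in fragment)


def diastic(source: str, seed: str) -> List[str]:
    """Spell the seed through the source: for the letter at position i of a seed word,
    take the next source word that has the same letter at position i."""
    words, out, pos = source.split(), [], 0
    for seed_word in seed.lower().split():
        for i, letter in enumerate(seed_word):
            while pos < len(words):
                candidate = words[pos]
                pos += 1
                if len(candidate) > i and candidate[i].lower() == letter:
                    out.append(candidate)
                    break
            else:
                return out  # source ran out before the seed was fully spelled through
    return out
```

For example, `diastic("just another mad exit sad hummus", "jam")` selects "just" (j first), "mad" (a second) and "hummus" (m third).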

Using my own personal text for this process makes my response a type of solitary play (Sutton-Smith, 2001), related to writing, reading, and journaling. When viewing the output of the sketch, part of the humour and fun was seeing recognizable snippets of my own text mashed together in unexpected ways.

While developing the sketch and thinking about how to present it, I was drawn to Darius Kazemi’s ‘Quantity’ talk from the 2014 Eyeo Festival, in which he outlines a framework for producing procedurally generated content that doesn’t become boring for the viewer: templated authorship, random input, and a framing context. My sketch already had the text template and the random input, so this framework pushed me to build a framing context by polishing the visuals beyond merely displaying text on the screen.

Reflection :

Overall I’m pretty happy with my response this week. Using tweets as a data set to generate more tweets isn’t a particularly unique concept (hence the proliferation of _ebooks-style bot accounts), but I think my presentation of the generated text is a lot of fun, and I enjoyed experimenting with a new coding library.

References :

Flanagan, M., 2009. Critical Play. Cambridge, MA: MIT Press.

Hartman, C.O., 1996. Virtual Muse: Experiments in Computer Poetry. Middletown, CT: Wesleyan University Press. Available from: ProQuest Ebook Central [Accessed 27 August 2022].

Kazemi, D., 2014. Quantity - EYEO Festival 2014 talk. [video] Available at: <https://vimeo.com/112289364> [Accessed 27 August 2022].

Sutton-Smith, B., 2001. The Ambiguity of Play. Cambridge, MA: Harvard University Press.

About This Work

By Eamonn Harte

Published On: 03/08/2022