Theme:

Sound is becoming increasingly important to me in my work. The more I pay attention to the games I love and ask what makes them enjoyable, satisfying and impactful, the more I appreciate the role audio plays. In The Art of Game Design (2015), Schell raises a few interesting points on the role of audio in games: “Audio feedback is much more visceral than visual feedback and more easily simulates touch.” He also suggests that a designer add music to their game as early as possible: “If you are able to choose a piece of music that feels the way you want your game to play, you have already efficiently made a great many subconscious decisions about what you want your game to feel like, or in other words, its atmosphere.” (Schell 2015, p390)

Key Questions:

- How can I create a visual or gameplay feature which responds to and complements input audio or music?

Context:

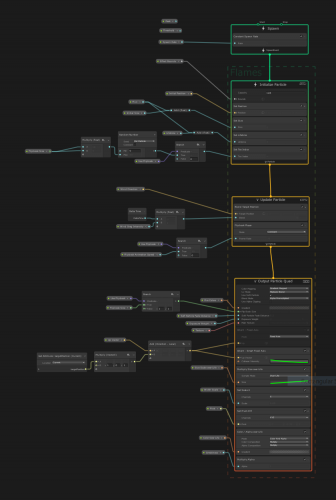

This week I’ve been exploring the VFX Graph in Unity. Until now I’ve concerned myself more with learning gameplay mechanics and programming, as that is where my main passions lie in the field of game design. Unity have been building scriptable render pipeline features into their engine for a few years and have recently begun releasing node-based visual tools to support development with them: Shader Graph and VFX Graph.

As part of learning these tools I decided to explore a method of visually interpreting music with the VFX Graph, intending to create a few visual effects that would be triggered by beats and other significant points in the music.

Method:

- I researched a number of music-mapping and beat-mapping implementations in Unity and found an article, Algorithmic Beat Mapping in Unity (see References), which covered exactly what I was interested in

- I created a scene in Unity using the High Definition Render Pipeline (HDRP), as it is the more versatile of the two pipelines compatible with the VFX Graph (the other being the Universal Render Pipeline), and imported the project files from the open-source work in the article, including the music provided with it

- I had to manually recreate the visual assets from the project so they would be compatible with the HDRP

- I created a simple fire effect in the scene using HDRP sample assets, with some guidance from the Brackeys tutorial on smoke and fire (see References)

- I added a simple light to the scene that I could drive with the audio input, mostly for testing purposes

- I added a sphere with a textured material to make it easier to see lighting changes

- I began adding methods to the audio analysis code that I could connect to the VFX Graph's input parameters, with mixed results
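
To make that last step more concrete, here is a minimal sketch of the kind of logic involved, written in plain Python so it can run outside the engine. It is not the code from the article or from my project. It assumes each frame supplies a list of spectrum magnitudes (in Unity these could come from `AudioSource.GetSpectrumData`), and it produces two things: a smoothed level that could drive something continuous such as a light's intensity, and a beat flag based on spectral flux (the summed increase in energy between frames) that could trigger a one-off burst in an effect. The names `AudioDriver`, `band_level`, `attack`, `release` and `sensitivity` are hypothetical and only there for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


def band_level(spectrum: Sequence[float], start: int, end: int) -> float:
    """Average magnitude of the spectrum bins in [start, end)."""
    bins = spectrum[start:end]
    return sum(bins) / len(bins) if bins else 0.0


def spectral_flux(previous: Sequence[float], current: Sequence[float]) -> float:
    """Sum of the positive bin-by-bin increases between two spectrum frames."""
    return sum(max(c - p, 0.0) for p, c in zip(previous, current))


@dataclass
class AudioDriver:
    """Hypothetical helper: turns spectrum frames into a smoothed level and a beat flag."""
    attack: float = 0.6       # fraction of the gap closed per frame when the input gets louder
    release: float = 0.1      # fraction of the gap closed per frame when it gets quieter
    sensitivity: float = 1.5  # flux must exceed the recent average by this factor
    history_size: int = 20    # number of past flux values used for the average
    level: float = 0.0        # smoothed output, e.g. for a light or an exposed VFX parameter
    _previous: List[float] = field(default_factory=list)
    _flux_history: List[float] = field(default_factory=list)

    def update(self, spectrum: Sequence[float]) -> bool:
        """Feed one spectrum frame; returns True if it looks like a beat."""
        # Smoothed level: rise quickly, fall slowly, so the visual does not flicker.
        target = band_level(spectrum, 0, len(spectrum))
        rate = self.attack if target > self.level else self.release
        self.level += (target - self.level) * rate

        # Beat flag: compare this frame's flux with the average of recent frames.
        flux = spectral_flux(self._previous, spectrum) if self._previous else 0.0
        history = self._flux_history
        average = sum(history) / len(history) if history else 0.0
        is_beat = bool(history) and flux > 0.0 and flux > average * self.sensitivity

        # Remember this frame for the next call, keeping only recent flux values.
        history.append(flux)
        self._flux_history = history[-self.history_size:]
        self._previous = list(spectrum)
        return is_beat


if __name__ == "__main__":
    driver = AudioDriver()
    quiet, loud = [0.01] * 8, [0.5] * 8
    for frame in [quiet, quiet, quiet, loud, loud, quiet]:
        beat = driver.update(frame)
        print(f"level={driver.level:.3f} beat={beat}")
```

In a Unity script the same two outputs would be written to the scene each frame, for example assigning the level to `Light.intensity` or passing it to `VisualEffect.SetFloat` with the name of an exposed property. Exaggerating the mapped range during testing is one way to make it obvious whether the effect is reacting at all.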

Reflection:

This project is somewhat incomplete. I managed to get the light to respond to the audio input fairly well, but I’m still not satisfied with the way the VFX fire is responding. It’s hard to see whether the VFX Graph is being affected by the audio input or just behaving as it did before. I would like to work more on this concept in the future, as I think this kind of audio-visual relationship could bring a lot to certain gameplay experiences.

References:

Brackeys YouTube tutorial, "Smoke and Fire", https://www.youtube.com/watch?v=R6D1b7zZHHA

Medium article, "Algorithmic Beat Mapping in Unity: Real-time Audio Analysis Using the Unity API", https://medium.com/giant-scam/algorithmic-beat-mapping-in-unity-real-time-audio-analysis-using-the-unity-api-6e9595823ce4

Schell, J., 2015. The Art Of Game Design: A Book Of Lenses. Boca Raton, FL: CRC Press.

About This Work

By Nick Margerison

Published On: 06/05/2020