THEME

Play and Self

Context

In terms of the theme ‘Self’, I thought about how, in this modern age of technology, people get to define themselves however they like. As a concept, I considered Self alongside Choice: not being defined by features you have no control over, but customizing and presenting yourself with features you choose. It reminded me of the concept of ‘fluid identities’ described by Jim Blascovich: reflections of yourself that you can change.

Self-presentation online has evolved a lot, from just being able to change your username and profile picture to programs like FaceRig that can detect a person’s facial features and transfer them onto a virtual avatar that moves and speaks accordingly.

This has led to a boom in what people call ‘VTubers’, or ‘virtual YouTubers’: people who use face-tracking software and custom models that let them embody themselves as a digital avatar. As John Luxford suggests, such an avatar is perhaps better at capturing a person’s sense of identity than their actual physical form.

The model can be made either in 3D or 2D, but I’m more inclined towards 2D graphics, because I have more experience with 2D illustration than 3D modelling. Moreover, the aesthetic of anime graphics is more appealing to me as a way to characterize myself.

Method

My original idea was to have something that would detect the player’s facial features through a webcam and translate them onto the face of the virtual character, which would shift accordingly. Unfortunately, it is far too early for me and my coding skills to take on a project of that calibre. That is why I decided to create a video simulation of it instead.

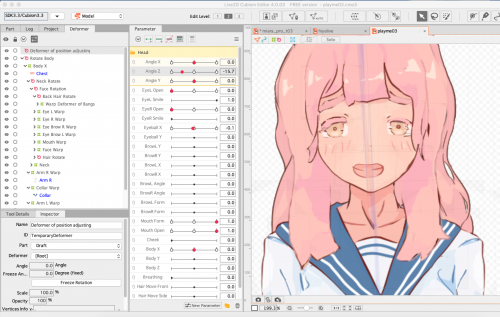

I have been exposed to Live2D models through animated artwork and VTubers, but I had never actually tried to make one before.

The illustration part was rather time-consuming, because I had to draw each movable element on a separate layer so that I could make convincing movements in Live2D. Once I had imported the PSD file into Live2D, I had to attach warp and rotation deformers to the necessary layers.

Once I had set everything up, I recorded a generic video of myself so that I could observe my movements and mimic them by keyframing the Live2D model’s parameters.

Response

The model moved as I intended, but its depth and range of motion were pretty limited. It was my first time using Live2D, and Live2D modelling is notoriously time-consuming, so I didn’t fine-tune it too much. I did learn a lot about the program as a whole through my experiments with it, such as the importance of exaggeration in places where details are lacking.

Observing myself and my idle movements showed me how many minuscule movements work together to make a character move.

Even though the idea was to experiment with the unfamiliar, I do regret not being able to produce an object that better reflects my initial concept of self and the choice to change.

References

Digital freedom: Virtual reality, avatars, and multiple identities: Jim Blascovich at TEDxWinnipeg

The Digital Identity Revolution | John Luxford | TEDxWpg
